From Shadow AI to Prompt Injection and Model Poisoning: The New Threat Landscape for AI-Enabled Enterprises

When Your Custom AI Becomes the Attack Vector – Why Building Secure-by-Design Matters Just as Much as Speed to Market.

Enterprises across every sector are rushing to build custom AI capabilities, from internal chatbots and AI agents that streamline operations to customer-facing agents that transform service delivery. Yet whilst organisations focus on capturing AI’s transformative potential, many are inadvertently expanding their attack surface and introducing risks that didn’t exist just months ago.

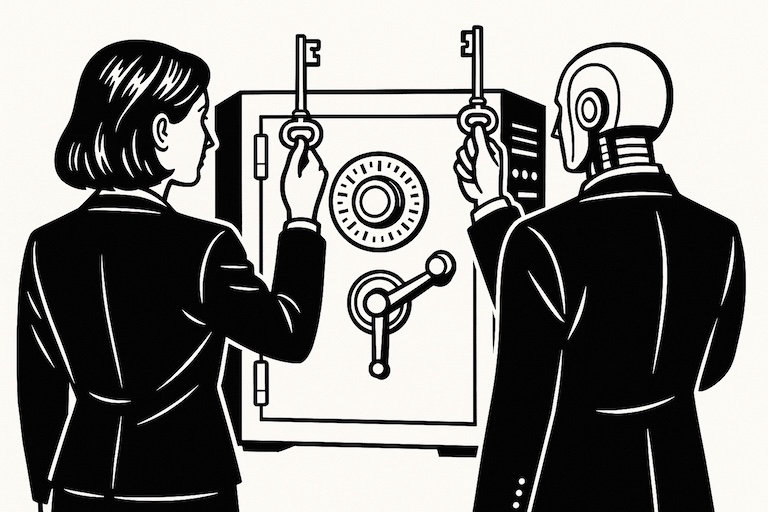

This shift represents a fundamental change in how we must approach cybersecurity. AI innovation is no longer just a technology consideration. It’s become a competitive imperative that directly impacts market position. The organisations that can innovate fastest whilst maintaining robust security will build insurmountable advantages over those that either move too slowly or compromise on security in order to deploy quickly.

Beyond Third-Party AI: The Custom Development Challenge

The ‘Shadow AI’ challenge remains significant, but this article focuses on a different frontier: the security implications of developing and deploying your own large language model applications and autonomous agents.

These custom AI systems, whether internal tools for employees or customer-facing applications, introduce entirely new categories of risk. Unlike third-party AI services with established security and data loss prevention measures, custom implementations require organisations to build security considerations into every layer of their AI stack.

The New Threat Vectors

The threats and mitigation strategies outlined below are intended as a top-level introduction. These topics will be covered in more detail at the Secure by Design event by experts from Palo Alto Networks and Unit 42 who work at the bleeding edge of this evolving landscape. Further reading resources are also provided at the bottom of the article for those who want a deeper dive on the full scope of AI-related threats and risk management best practices.

And needless to say, there is no substitute for real-world experience from innovation and security leaders actually addressing these challenges (see a full list of our event speakers here).

Prompt Injection Attacks

Perhaps the most immediate threat facing custom LLM deployments is prompt injection. Attackers craft malicious inputs designed to manipulate model behaviour, effectively bypassing safety controls or extracting sensitive information. Think of it as the AI equivalent of SQL injection attacks.

These attacks can range from simple “jailbreaking” prompts that override model guidelines to sophisticated indirect injections where malicious instructions are hidden in data the model processes. For instance, an attacker might embed invisible instructions in a document that, when processed by an AI system, cause it to leak confidential information.

Mitigation strategies include implementing robust input sanitisation frameworks, deploying context-aware monitoring to detect unusual prompt patterns, and ensuring all AI outputs undergo structured validation before being acted upon.
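To make the last of these mitigations concrete, here is a minimal sketch of structured output validation in Python. It assumes a hypothetical application in which the model is asked to reply with a JSON object containing an `action` and an `argument`; the field names, the allow-list, and the length limit are illustrative assumptions, not a prescribed schema.

```python
import json

# Hypothetical allow-list of actions the application is willing to execute.
ALLOWED_ACTIONS = {"lookup_order", "send_reply"}


def validate_model_output(raw: str) -> dict:
    """Parse model output and reject anything outside the expected shape."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError("output is not valid JSON") from exc
    if not isinstance(data, dict):
        raise ValueError("output must be a JSON object")
    if set(data) != {"action", "argument"}:
        raise ValueError("unexpected or missing fields")
    action, argument = data["action"], data["argument"]
    if not isinstance(action, str) or action not in ALLOWED_ACTIONS:
        raise ValueError("action not permitted")
    if not isinstance(argument, str) or len(argument) > 200:
        raise ValueError("argument must be a short string")
    return data
```

The point of the design is that nothing the model says is acted upon unless it matches a shape and an allow-list defined outside the model. An injected instruction can still influence the content of a permitted action, so a check like this complements input filtering and monitoring rather than replacing them.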

Autonomous Agent Compromise

As AI agents become more sophisticated and autonomous, they present unique security challenges. To be effective, an agent must have access to company data and, as with a human user, that access brings risk. These agents can be compromised through indirect prompt injection attacks or memory poisoning, effectively turning them into malicious insiders with legitimate access to systems and data.

A compromised autonomous agent is particularly dangerous because it operates with the permissions and trust levels of the user or system it represents and does so at software speed. Mitigation approaches include implementing hardware-enforced sandboxing to isolate agent actions, creating segregated data silos that contain only the data required by the agent, deploying behavioural anomaly detection systems, and maintaining immutable audit trails of all agent decisions and actions.
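As one illustration of the audit trail idea, the sketch below chains each log entry to the hash of the previous one, so that editing an earlier record is detectable. It is tamper-evident rather than truly immutable: a production system would also need write-once storage or an external anchor for the latest hash. The `AuditLog` class and the record fields are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Append-only log where each entry commits to the previous entry's hash."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(prev_hash, record):
        # Canonical JSON so the same record always hashes the same way.
        payload = json.dumps(record, sort_keys=True).encode("utf-8")
        return hashlib.sha256(prev_hash.encode("utf-8") + payload).hexdigest()

    def append(self, record):
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        entry_hash = self._digest(prev_hash, record)
        self.entries.append({"record": record, "prev": prev_hash, "hash": entry_hash})

    def verify(self):
        """Recompute the chain and report whether every link still matches."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if entry["hash"] != self._digest(prev_hash, entry["record"]):
                return False
            prev_hash = entry["hash"]
        return True
```

An agent runtime could call `append` for every tool invocation and run `verify` on a schedule or before the log is used in an investigation.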

AI-Generated Code Vulnerabilities

Development teams increasingly rely on LLM code generators to accelerate software development, but this convenience comes with real security trade-offs. Studies indicate that a significant proportion (up to 40%) of LLM-generated code samples contain security weaknesses, including SQL injection flaws, insecure data handling, hardcoded credentials, and other vulnerabilities that make privilege escalation or data exfiltration more likely. Additionally, LLMs can exhibit “contextual blindness”, generating syntactically correct code that’s fundamentally inappropriate for the specific security context or business logic.

The risks extend beyond simple coding errors. LLMs may inadvertently expose sensitive information, reproduce copyrighted code leading to licence violations, or introduce insecure dependencies that expand the attack surface. Over-reliance on code generators can also create a false sense of security, with developers integrating generated code without sufficient understanding or review. As the AI-generated share of a codebase grows, maintaining an adequate level of review becomes increasingly challenging.

Effective safeguards include mandatory human code review with security focus, automated vulnerability scanning specifically designed for AI-generated code, comprehensive licence compliance checking, rigorous supply chain management for any new dependencies, and developer training programmes that emphasise the unique risks of LLM-generated code.
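To give a flavour of what automated scanning can look like, the sketch below uses Python's standard `ast` module to flag string literals assigned to variables whose names suggest a secret. It covers only one of the weaknesses mentioned above (hardcoded credentials) and is far cruder than a real scanner; the `SUSPECT_NAMES` list is an assumption to be tuned per codebase.

```python
import ast

# Hypothetical name fragments that suggest a secret; tune for your codebase.
SUSPECT_NAMES = ("password", "secret", "token", "api_key")


def find_hardcoded_secrets(source):
    """Return (line, name) pairs for string literals assigned to suspicious names."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Assign):
            continue
        value = node.value
        if not (isinstance(value, ast.Constant) and isinstance(value.value, str)):
            continue  # only literal strings are of interest here
        if not value.value:
            continue  # empty strings are usually placeholders
        for target in node.targets:
            if not isinstance(target, ast.Name):
                continue
            if any(fragment in target.id.lower() for fragment in SUSPECT_NAMES):
                findings.append((node.lineno, target.id))
    return findings
```

A check like this could run in a pre-commit hook or CI job on generated code before it reaches human review; values read from the environment or a secrets manager are not flagged because they are not string literals.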

Excessive Agency and Overautonomy

Perhaps the most subtle but potentially catastrophic risk comes from granting AI systems too much autonomy without adequate safeguards. Simply providing access to more company data as a means to improve performance can be tempting for developers, while the pressure from management to deploy quickly can reduce scrutiny. Overly autonomous agents can make harmful decisions faster than humans can intervene, particularly in high-stakes environments.

Balanced approaches include implementing human-in-the-loop guardrails for critical decisions, designing dynamic privilege scaling that adjusts agent permissions based on risk context, and establishing clear boundaries around what actions agents can take autonomously.
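A human-in-the-loop guardrail can be sketched as a small authorisation gate that sits between the agent and its tools. The example below is illustrative only: the action names, the risk tiers in `ACTION_RISK`, and the `approver` callback (standing in for whatever approval workflow an organisation uses) are all assumptions.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical risk tiers per action; anything unlisted is denied by default.
ACTION_RISK = {"read_ticket": "low", "send_email": "medium", "issue_refund": "high"}


@dataclass
class Decision:
    allowed: bool
    reason: str


def authorise(action: str, approver: Callable[[str], bool]) -> Decision:
    """Allow low-risk actions, escalate riskier ones to a human, deny unknown ones."""
    risk = ACTION_RISK.get(action)
    if risk is None:
        return Decision(False, "unknown action")
    if risk == "low":
        return Decision(True, "auto-approved")
    # Medium and high risk actions wait for an explicit human decision.
    if approver(action):
        return Decision(True, "approved by human")
    return Decision(False, "rejected by human")
```

Denying unlisted actions by default is the important design choice here: the boundary of what an agent may do autonomously is written down explicitly rather than inferred from whatever tools happen to be reachable.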

AI Supply Chain Attacks

Modern AI development heavily relies on pre-trained models, third-party libraries, and external datasets. Each component in this supply chain represents a potential attack vector. Compromised foundation models or malicious plugins can introduce vulnerabilities throughout the entire AI stack.

Protection strategies include maintaining comprehensive Software Bills of Materials (SBOM) for all AI components, implementing runtime integrity checks, and establishing rigorous vetting processes for all external AI components.
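One simple form of integrity check is to compare a model artefact's SHA-256 digest with a value recorded when the artefact was vetted, and to refuse to load it on a mismatch. The sketch below assumes the expected digest is obtained from a trusted source such as a signed manifest; the function names are hypothetical.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large model files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artefact(path: Path, expected_sha256: str) -> bool:
    """Return True only if the file's digest matches the recorded value."""
    return hmac.compare_digest(sha256_of(path), expected_sha256.lower())
```

A digest check only proves that the file is the one that was vetted. It says nothing about whether the original artefact was trustworthy, which is why it sits alongside vetting and an SBOM rather than replacing them.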

Training Data Poisoning

When organisations train custom models or fine-tune existing ones, they become vulnerable to data poisoning attacks. Adversaries can inject malicious content into training datasets, corrupting the model’s behaviour in subtle but significant ways. This might manifest as biased outputs, hidden backdoors, or models that behave normally most of the time but exhibit malicious behaviour under specific conditions.

The challenge lies in the scale. Modern AI training involves vast datasets that make manual verification impractical. Effective defences include implementing comprehensive data provenance tracking, using adversarial training techniques to expose models to potential poisoning attempts, and incorporating synthetic data generation to reduce reliance on potentially compromised external sources.
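Data provenance tracking can start with something as modest as a manifest that records a content hash and a source for every training sample, so that later changes or unexplained additions stand out. The following is a minimal sketch under that assumption; the `ProvenanceRecord` type, the sample layout, and the source labels are hypothetical.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class ProvenanceRecord:
    sha256: str
    source: str


def fingerprint(text):
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def build_manifest(samples):
    """Map sample id to its record; `samples` is {id: (text, source)}."""
    return {
        sample_id: ProvenanceRecord(fingerprint(text), source)
        for sample_id, (text, source) in samples.items()
    }


def find_drift(samples, manifest):
    """Return ids that are new or whose content changed since the manifest was built."""
    drifted = []
    for sample_id, (text, _source) in samples.items():
        record = manifest.get(sample_id)
        if record is None or record.sha256 != fingerprint(text):
            drifted.append(sample_id)
    return sorted(drifted)
```

This does not detect poisoned data that was present when the manifest was first built. It only narrows the question to which samples changed and where they came from, which makes targeted review feasible at scale.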

Model Theft and Extraction

Proprietary AI models represent significant intellectual property investments. Attackers can attempt to reverse-engineer these models through sophisticated API querying techniques or by extracting model weights directly. Successful model theft not only compromises competitive advantage but can also expose the organisation to further attacks using its own AI capabilities.

Protection measures include implementing intelligent rate limiting and query monitoring, embedding digital watermarks in model outputs for tracking, and applying differential privacy techniques that add carefully calibrated noise to outputs, making model extraction significantly more difficult.
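Of these measures, rate limiting is the easiest to illustrate. The sketch below is a per-client sliding-window limiter in Python; it addresses query volume only and does not implement watermarking or differential privacy. The class name and parameters are hypothetical, and the `now` argument exists purely so the behaviour can be tested deterministically.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `max_requests` per client within any `window_seconds` span."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._history = defaultdict(deque)  # client id -> recent request times

    def allow(self, client_id, now=None):
        now = time.monotonic() if now is None else now
        history = self._history[client_id]
        # Discard timestamps that have aged out of the window.
        while history and now - history[0] >= self.window_seconds:
            history.popleft()
        if len(history) >= self.max_requests:
            return False
        history.append(now)
        return True
```

Extraction attacks typically need a large number of queries, so a limiter like this raises the cost of an attack. Query monitoring would then look for patterns the limiter alone cannot see, such as systematic probing spread across many API keys.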

Making AI Security a First-Class Citizen

The key to navigating these threats successfully lies in treating AI security as a “first-class citizen” throughout the development lifecycle. This means embedding security considerations into every stage of AI development and deployment, from initial design through to production monitoring.

Rather than treating security as a separate checklist to be completed after development, successful organisations integrate security thinking into their AI development process from day one. This requires adopting ‘SecDevOps’ practices specifically adapted for AI systems, where security specialists work alongside data scientists and AI engineers throughout the project lifecycle.

This integrated approach means considering security implications during model selection, incorporating security testing into model validation processes, and designing AI architectures with security as a core requirement rather than an afterthought. It also means establishing clear governance frameworks that balance innovation velocity with robust risk management.

The Innovation Imperative

Here’s the crucial insight: cybersecurity leaders can either be enablers of innovation or bottlenecks. Those who embrace their role as innovation enablers, working collaboratively with AI development teams to build secure systems from the ground up, will help their organisations gain competitive advantage. Those who approach AI security purely as a risk management exercise will find themselves constantly playing catch-up as their organisations struggle to innovate at the pace the market demands.

The most successful enterprises will be those that recognise AI security not just as a defensive necessity, but as a competitive differentiator. The organisations that win in the ‘AI Transition’ will be those capable of developing innovation and security maturity in tandem.

Further Reading

For those seeking to deepen their understanding of AI security frameworks, these four resources provide comprehensive guidance:

Unit 42 (“AI Agents are Here. So are the Threats”) – Unit 42 (Palo Alto Networks’ industry-leading threat intelligence, incident response and cyber risk division) draws on insights from analysis of 500 billion daily events to shed light on security vulnerabilities in agentic AI applications, detailing nine attack scenarios and offering mitigation strategies to enhance protection against threats like prompt injection and tool exploitation.

MITRE ATLAS (Adversarial Threat Landscape for AI Systems) – A comprehensive knowledge base of adversarial tactics and techniques against machine learning and gen-AI systems. ATLAS provides detailed case studies of real-world attacks and maps them to the familiar MITRE ATT&CK framework, making it invaluable for security teams developing AI-specific threat models.

NIST Artificial Intelligence Risk Management Framework (AI RMF) – The gold standard for AI risk management, offering a structured approach to identifying, assessing, and mitigating AI-related risks. The framework emphasises trustworthy AI principles and provides practical guidance for implementing responsible AI governance across organisations.

OWASP Top 10 for Large Language Model Applications 2025 – The definitive list of security vulnerabilities specific to LLM applications, regularly updated to reflect the latest threat landscape. Each vulnerability includes detailed explanations, attack scenarios, and prevention strategies specifically tailored for development teams building LLM-based applications.